(...continued from Part 1)

The status of an instance changes to Running as it boots. It usually takes another ten to fifteen minutes before the instance is ready to accept logins. In this example, I will be creating a master node and a single slave. While these terms may not be used in the OpenSTA documentation, I define master node as the server that holds the test repository. The master node is used to control all aspects of a test using OpenSTA Commander as the primary interface.

Once started, both VMs are identical with the exception of their IP address and network name. To keep track of which server is which, I use the "tag" function to name each instance. Right-click a server in the instance pane and choose "add tag". I'll designate the first one as master and the second as slave1 to reflect their roles.

For each instance started, EC2 creates both internal (private) and external (public) addresses. For machines to talk to one another within EC2, they must use either their private IP address or private DNS name. To connect to these instances from outside EC2, use the public IP or DNS name. If you right-click an instance, you can view its details, copy the details to the paste buffer, or simply "connect to instance", which starts the remote desktop program (RDP) and points it at the server you selected. You can also "get password" for a newly created AMI if one has not been assigned.
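As a quick sanity check of which kind of address you are looking at, here is a minimal sketch using Python's standard `ipaddress` module. The function name `address_scope` is my own illustration and is not part of OpenSTA or Elasticfox; the sample address is the master's private IP that appears later in this post.

```python
import ipaddress

def address_scope(addr: str) -> str:
    """Return 'private' if Python classifies the address as private, else 'public'."""
    return "private" if ipaddress.ip_address(addr).is_private else "public"

# The master's private address from this guide: usable only inside EC2.
print(address_scope("10.209.67.50"))  # prints "private"
```

An address reported as private is the one to give to other instances inside EC2; from your office PC you would need the instance's public IP or DNS name instead.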

The first step in configuring the new instances is to connect to the master node using RDP. One of the first things you will be greeted with is a message that the OpenSTA name server has exited, and you will be asked if you want to send an error report. Just cancel the dialogs. What's happening here? When OpenSTA was installed on the AMI, it took note of the server name and IP address of the machine it was installed on. It remembers that information and uses it to connect to the repository, which holds all scripts, data, test definitions, etc. The error message occurs because the instance we just connected to was assigned a new IP address when it booted. The name server (the background process that handles all the distributed communications) can't reach the repository because its information about the IP address of the repository host is stale.

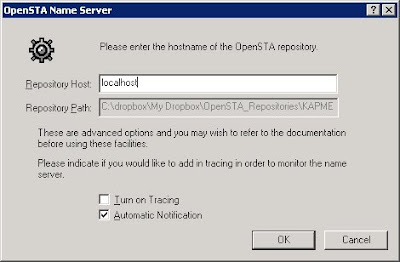

The fix is trivial, but must be done each time a system (master or slave) is started. The order in which the name servers are fixed and restarted also matters: do the master first, then the slaves. Starting with the master node, log in, dismiss the error message, then right-click the name server icon in the system tray (it looks like a green wheel) and select CONFIGURE. Enter 'localhost' in the dialog box for "repository host".

Note that I have also moved my repository to a different directory (c:\dropbox\...). When an AMI is started, the C: drive reverts to the state it was in when the AMI was bundled. Any changes we make to the contents of C: will be LOST when this instance is shut down. It is rather inconvenient to create scripts, run tests, and do all sorts of analysis only to have the files lost after we shut down. I have opted to use free software (Dropbox), which replicates the contents of the dropbox directory (on a local drive) to a network drive (no doubt somewhere in Dropbox's cloud). On my office PC, I run Dropbox to replicate from this network drive to a local drive. This replication is bi-directional. There is rudimentary conflict resolution at the file level, but no distributed locking or sharing mechanism at the file record level. Any changes to the repository on the EC2 instance are replicated to my PC and vice versa. This allows me to use my PC as a workbench for scripting and post-test analysis using my favorite tools, and to use the cloud for running large tests. More about this in a future post.

OK, back to configuring the name server on the master node. After entering localhost in the Repository Host field, click the OK button. You must now restart the name server. To do so, right-click the name server icon and select "shutdown". Once the name server icon disappears, launch the name server again (start->All Programs->OpenSTA->OpenSTA Name Server). Verify it is configured correctly by right-clicking the name server icon and selecting "registered objects". You should see something like this:

Note the value 10_209_67_50. It is based on the EC2 private IP address of the master instance we just configured. Remember the IP address 10.209.67.50. It is the master node's IP address, and we are going to need it in a few minutes as we repeat this process on each slave instance we started. Remember, the master node holds the repository. The only difference between a master node and a slave node is that a slave node has a remote server's IP address or network name as the "Repository Host" in the name server dialog box.
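To make the relationship between the two forms explicit, here is a small sketch that converts between them. It assumes the registered value is simply the private IP with dots replaced by underscores, which is what this one example suggests; I have not confirmed this against the OpenSTA documentation, and both function names are hypothetical.

```python
def registered_name_from_ip(ip: str) -> str:
    """Assumed mapping: dots in the IP become underscores."""
    return ip.replace(".", "_")

def ip_from_registered_name(name: str) -> str:
    """Reverse of the assumed mapping: underscores become dots."""
    return name.replace("_", ".")

print(registered_name_from_ip("10.209.67.50"))  # prints "10_209_67_50"
print(ip_from_registered_name("10_209_67_50"))  # prints "10.209.67.50"
```

This is handy for jotting down the master's address from the "registered objects" display before moving on to the slaves.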

Next, create an RDP session to slave1. I prefer to RDP from the master node to each of the slaves. If you are using a lot of slaves, run Elasticfox on the master node, highlight ALL slaves in the instances pane, right-click the selected instances, and select Connect To Instance. This will start as many RDP sessions as you have slaves. All you need to do is manually type in the user name and password.

Upon logging in to each slave, you will be greeted with the same error message about the name server exiting. Repeat the steps outlined above to reconfigure the slave's name server, but this time specify the master node's private IP address (in this example 10.209.67.50) as the repository host in the name server configuration dialog. Next, shut down the name server and restart it. Give the slave's name server a couple of minutes to complete its process of registering with the master node.

This process needs to be repeated for every slave instance. Once you have logged into the slave, leave the RDP session going since logging out will stop the name server. I prefer to initiate the RDP sessions from the master node, and keep just one RDP session from my PC to the master up to keep clutter at a minimum. Keep in mind that if you disconnect the RDP session the remote login will remain. Just don't log off from the remote slave. There may be a way to run the name server as a service, but that will take more work. Should I find a way, I will blog about it and likely rework this portion of the guide.

When finished with all the slaves, we can use OpenSTA Commander on the master node to verify which servers have joined this cluster of master and slaves. To do so, in Commander, choose tools->Architecture Manager. Note the top node is the master, and the slave we just configured appears below it. Focusing on any node in the list shows details like computer name (important, we'll need this later), OS info, memory, number of processors, etc. Here is what it looks like:

The top server in the list is the master. The second node is our first slave. Clicking on any server in the list will display information about that system including its computer name, OS info, memory available, etc.

It takes about 5 minutes to configure a handful of servers this way. The process sounds complicated but can be summed up succinctly: Connect to the master node, configure the name server's "repository host" to be localhost, and restart the name server. Next, connect to each slave, configure the name server's "repository host" to be the (private) IP address of the master node, and restart the name server. Verify all nodes are configured correctly with the menu option tools->Architecture Manager in Commander.
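The rule in that summary can be written down as a tiny sketch. This only illustrates which value goes in the "repository host" field for each node; it does not configure OpenSTA, which is still done by hand through the name server dialog. The function name and the convention that role names match the tags I assigned earlier (master, slave1, ...) are my own assumptions.

```python
def repository_host(role: str, master_private_ip: str) -> str:
    """Return the value to enter as "repository host" for a node with this role."""
    if role == "master":
        return "localhost"        # the master holds the repository itself
    if role.startswith("slave"):
        return master_private_ip  # slaves point at the master's private IP
    raise ValueError(f"unknown role: {role}")

# Master first, then each slave, as in the steps above.
for node in ["master", "slave1"]:
    print(node, "->", repository_host(node, "10.209.67.50"))
```

Listing the master first mirrors the order in which the name servers should be reconfigured and restarted.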

At this point, you are ready to run multi-injector tests in the cloud, which we will do in my next installment.

(continued in part 3)

Bernie Velivis, President Performax Inc

Thursday, December 17, 2009
